Object Detection with YOLOv5: Understanding Anchor Boxes

Object detection is a field of computer vision concerned with identifying and localizing objects within an image or video. One of the most widely used object detectors is YOLOv5, a member of the YOLO (“You Only Look Once”) family of models. YOLOv5 is known for its speed and accuracy, making it a popular choice for real-time applications.

In this tutorial, we will focus on one of the key components of YOLOv5: anchor boxes. Anchor boxes are predefined reference boxes of fixed width and height. Rather than predicting box coordinates from scratch, YOLOv5 predicts how each anchor should be shifted and scaled to fit an object, which makes the location and size of objects easier to learn.

Throughout this tutorial, we will explain what anchor boxes are, how they work, and how to implement them in YOLOv5 using Python and PyTorch. We will also provide Python code examples to help you get started with implementing anchor boxes in your own projects.

Whether you are new to object detection or an experienced practitioner, this tutorial will provide you with a solid understanding of anchor boxes and how they can be used to improve the accuracy of your object detection models.

Content of this tutorial:

- What are anchor boxes?

- Why do we need anchor boxes in YOLOv5?

- How do anchor boxes help in object detection?

- Preparing the dataset and annotations

- Setting up the YOLOv5 model with anchor boxes

- Training the model

- Evaluating the model

- Loading the necessary libraries

- Defining the YOLOv5 model architecture with anchor boxes

- Training the model

- Evaluating the model

- Recap of what we learned and future directions and improvements

Understanding anchor boxes

In this section, we will dive deeper into anchor boxes and their role in YOLOv5. We will explore what anchor boxes are, why they are used in YOLOv5, and how they help improve object detection accuracy.

What are anchor boxes?

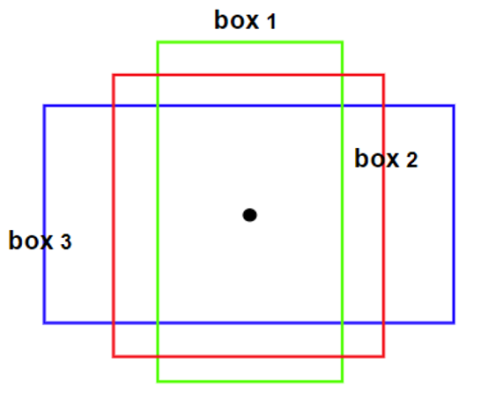

Anchor boxes are predefined reference boxes used by object detection algorithms like YOLOv5. A bounding box is a rectangle drawn around an object in an image or video to indicate the location and size of the object. A detector could try to predict the coordinates of each bounding box directly, but this is hard to learn because objects vary widely in shape and size, and a single predictor would have to cover all of them.

This is where anchor boxes come in. Anchor boxes are a set of predefined boxes that represent typical object shapes and sizes. Each object is matched to the anchor box (or boxes) whose shape and size are closest to its own, and the network only has to predict a small correction relative to that anchor. This allows the algorithm to more accurately predict the location and size of objects in an image.
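The idea of picking the best-matching anchor can be sketched in a few lines of Python. This is a minimal illustration, assuming that "best match" means the highest intersection over union (IoU) between the object's shape and the anchor's shape when both are centered at the same point. The helper names wh_iou and best_anchor are hypothetical and do not come from the YOLOv5 codebase, whose own target assignment uses a width/height ratio threshold rather than the single best IoU.

```python
def wh_iou(box_wh, anchor_wh):
    """IoU of two boxes that share the same center, given only (width, height)."""
    inter = min(box_wh[0], anchor_wh[0]) * min(box_wh[1], anchor_wh[1])
    union = box_wh[0] * box_wh[1] + anchor_wh[0] * anchor_wh[1] - inter
    return inter / union


def best_anchor(box_wh, anchors):
    """Return the index of the anchor whose shape overlaps the box the most."""
    return max(range(len(anchors)), key=lambda i: wh_iou(box_wh, anchors[i]))


# Nine anchor shapes (width, height) in pixels, as used later in this tutorial.
anchors = [(10, 13), (16, 30), (33, 23), (30, 61), (62, 45),
           (59, 119), (116, 90), (156, 198), (373, 326)]

# A 60x110 object is closest in shape to the (59, 119) anchor at index 5.
print(best_anchor((60, 110), anchors))
```

Comparing shapes only, and ignoring position, is what makes this a question of choosing an anchor: the position is handled separately by the grid cell in which the object's center falls.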

Anchor boxes are typically defined based on the shapes and sizes of objects in the training dataset. For example, if the training dataset contains a lot of small objects, the anchor boxes may be smaller in size. If the training dataset contains a lot of objects with a certain aspect ratio, the anchor boxes may be defined to match that aspect ratio.
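One common way to derive anchors from a dataset is to cluster the widths and heights of the annotated boxes. The sketch below assumes plain k-means with Euclidean distance on (width, height) pairs. The function name kmeans_anchors and the sample boxes are hypothetical, and YOLOv5's own AutoAnchor routine uses a more elaborate procedure, so treat this as an illustration of the idea only.

```python
import numpy as np


def kmeans_anchors(wh, k, iters=50, seed=0):
    """Cluster (width, height) pairs into k anchor shapes with plain k-means."""
    wh = np.asarray(wh, dtype=np.float64)
    rng = np.random.default_rng(seed)
    # Start from k distinct boxes picked at random from the data.
    centers = wh[rng.choice(len(wh), size=k, replace=False)]
    for _ in range(iters):
        # Assign every box to its nearest center (Euclidean distance in w, h).
        dists = np.linalg.norm(wh[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of its boxes; keep it if it has none.
        new_centers = np.array([
            wh[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    # Sort by area so that small anchors come first.
    return centers[np.argsort(centers.prod(axis=1))]


# Hypothetical box sizes in pixels: two small objects and two large ones.
sample_wh = [(10, 10), (12, 12), (100, 100), (102, 102)]
print(kmeans_anchors(sample_wh, k=2))
```

With a dataset dominated by small objects, most cluster centers end up small, which is the behavior described above.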

In YOLOv5, anchor boxes are used together with feature maps to predict the location and size of objects in an image. A feature map is a low-resolution grid generated by passing the image through a convolutional neural network (CNN). The standard YOLOv5 models predict at three scales, with strides of 8, 16, and 32 pixels, and assign three anchor boxes to each scale. For every cell of each feature map and every anchor at that scale, the network outputs offsets that shift and rescale the anchor into a predicted box, together with an objectness score and class scores. Because the network only has to adjust a reasonable starting shape, it can predict the location and size of objects more accurately.
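To make the role of the anchor concrete, the following sketch decodes one raw prediction into a box in pixels. It assumes the decoding used in the YOLOv5 detection head as we understand it: the center is placed relative to the grid cell and scaled by the stride, and the anchor's width and height are multiplied by a factor between 0 and 4. The function name decode_box and the example values are hypothetical.

```python
import math


def sigmoid(x):
    """Logistic function, squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(t, cell_xy, anchor_wh, stride):
    """Turn raw outputs (tx, ty, tw, th) into a pixel box (cx, cy, w, h)."""
    tx, ty, tw, th = t
    # The center can move from -0.5 to 1.5 cells relative to the cell origin.
    cx = (2.0 * sigmoid(tx) - 0.5 + cell_xy[0]) * stride
    cy = (2.0 * sigmoid(ty) - 0.5 + cell_xy[1]) * stride
    # Width and height are the anchor's size scaled by a factor in (0, 4).
    w = (2.0 * sigmoid(tw)) ** 2 * anchor_wh[0]
    h = (2.0 * sigmoid(th)) ** 2 * anchor_wh[1]
    return cx, cy, w, h


# All-zero raw outputs give a box centered in the cell with the anchor's size.
print(decode_box((0.0, 0.0, 0.0, 0.0), cell_xy=(3, 2), anchor_wh=(30, 61), stride=16))
```

The example shows why a good anchor helps: when the anchor already resembles the object, the network's raw outputs only need to stay close to zero.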

Why do we need anchor boxes in YOLOv5?

Anchor boxes are an important component of YOLOv5 because they give the network a sensible starting point for every prediction. As we mentioned earlier, predicting box coordinates directly is difficult when objects vary widely in shape and size. Because anchor boxes are chosen to match the shapes and sizes of objects in the training dataset, the network only needs to learn small adjustments to the best-matching anchor, which makes its predictions of location and size more accurate.

Another advantage of using anchor boxes is that they let different predictors specialize. Small anchors on the high-resolution feature map handle small objects, large anchors on the low-resolution feature map handle large objects, and anchors with different aspect ratios cover tall and wide objects. This allows YOLOv5 to detect objects across a wide range of sizes and aspect ratios.

How do anchor boxes help in object detection?

Anchor boxes help object detection in several ways. First, as described above, anchor boxes of different sizes and aspect ratios allow YOLOv5 to cover objects of different sizes and shapes.

Second, well-chosen anchor boxes can help reduce the number of false positives and false negatives. False positives occur when the algorithm detects an object that is not actually present in the image, while false negatives occur when the algorithm fails to detect an object that is present in the image. When the anchors fit the dataset well, more annotated objects are matched to an anchor during training and the predicted boxes are more precise, which tends to reduce both kinds of error.

Finally, anchor boxes fit naturally into a single-pass detector. YOLOv5 predicts the boxes for all anchors at all grid cells in one forward pass of the network, without a separate region proposal stage. This is important for real-time applications where speed is critical.

Implementing anchor boxes in YOLOv5

In this section, we will provide a step-by-step guide on how to implement anchor boxes in YOLOv5 using Python and PyTorch. We will cover how to prepare the dataset and annotations, how to set up the YOLOv5 model with anchor boxes, how to train the model, and how to evaluate the model.

Preparing the dataset and annotations

Before we can train a YOLOv5 model with anchor boxes, we need to prepare our dataset and annotations. The dataset should contain images of the objects we want to detect, and the annotations should contain information about the location and size of the objects in each image.

To prepare the dataset, we first need to collect a set of images that contain the objects we want to detect. These images should be representative of the types of images the model will be used on. We should also ensure that the images are of high quality and that the objects are clearly visible.

Once we have our images, we need to annotate them with bounding boxes that indicate the location and size of the objects we want to detect. There are several tools available for annotating images, such as LabelImg and RectLabel. When annotating the images, we should ensure that the bounding boxes are as accurate as possible and that they tightly fit around the objects we want to detect.

After we have annotated our images, we need to convert the annotations into a format that can be used by YOLOv5. YOLOv5 expects one line per object in the format (class, x_center, y_center, width, height), where class is the integer class label of the object, (x_center, y_center) is the center of the bounding box, and (width, height) is the width and height of the bounding box, with all four coordinates normalized by the image size. Some annotation tools, such as LabelImg, can save annotations in YOLO format directly. Annotations in other formats need to be converted with a short script.
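As an illustration of the conversion, the sketch below turns a box given by its pixel corners into the (class, x_center, y_center, width, height) format. It assumes that the coordinates are normalized by the image width and height, as described later in this tutorial. The function names to_yolo and to_label_line are hypothetical and are not part of the YOLOv5 codebase.

```python
def to_yolo(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert pixel corner coordinates to normalized YOLO format."""
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return class_id, x_center, y_center, width, height


def to_label_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Format one object as a line of a YOLO label text file."""
    c, xc, yc, w, h = to_yolo(class_id, x_min, y_min, x_max, y_max, img_w, img_h)
    return f"{c} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


# A 200x200 pixel box in a 640x480 image, labeled as class 0.
print(to_label_line(0, 100, 200, 300, 400, img_w=640, img_h=480))
```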

Overall, preparing the dataset and annotations is an important step in training a YOLOv5 model with anchor boxes. By ensuring that our dataset is representative and our annotations are accurate, we can improve the accuracy of our object detection model.

Setting up the YOLOv5 model with anchor boxes

Once we have prepared our dataset and annotations, we can set up the YOLOv5 model with anchor boxes. To do this, we first need to define our anchor boxes. As we mentioned earlier, anchor boxes are predefined bounding boxes that are used to represent objects of different shapes and sizes. We can define our anchor boxes based on the shapes and sizes of objects in our training dataset.

After we have defined our anchor boxes, we need to tell the YOLOv5 model to use them. This does not require changing the network layers or the loss function by hand. In the YOLOv5 codebase, the anchors are listed in the anchors section of the model configuration file, and both the detection head and the loss function read them from there. The detection head already contains the convolutional layers that predict, for each anchor, the offsets that turn it into a bounding box.

To implement anchor boxes in YOLOv5, we can use the open source YOLOv5 codebase available on GitHub. The codebase provides a models/yolov5s.yaml file that defines the YOLOv5 model architecture, including its anchors, as well as a train.py script that can be used to train the model. We can edit the anchors in the yolov5s.yaml file to match our dataset. By default, train.py also checks how well the configured anchors fit the training labels (the AutoAnchor step) and recomputes them if the fit is poor.

Overall, setting up the YOLOv5 model with anchor boxes mainly means choosing anchors that fit our dataset and placing them in the model configuration. Well-fitting anchors can improve the accuracy of our object detection model.

Training the model

Once we have set up the YOLOv5 model with anchor boxes, we can train the model on our annotated dataset. To do this, we need to use the train.py script provided in the YOLOv5 codebase. The train.py script takes several command line arguments, such as the path to the dataset and the number of epochs to train for.

During training, the YOLOv5 model learns to predict the location and size of objects in an image using the anchor boxes. Training is fully supervised: each annotated object is matched to the anchors whose shape is close to its own, and the model is penalized for errors in the predicted box, the objectness score, and the class label. Anchors that are not matched to any object are trained to predict that no object is present.

To try out the trained model, we can use the detect.py script provided in the YOLOv5 codebase. The detect.py script takes an image as input and outputs the predicted bounding boxes and class labels for the objects in the image. To measure performance against the annotations, recent releases of the codebase provide a validation script, val.py, which reports metrics such as precision, recall, and mean average precision (mAP).

Overall, training the YOLOv5 model with anchor boxes involves using the train.py script to train the model on our annotated dataset, then running inference with detect.py and measuring performance against the annotations.

Evaluating the model

After training the YOLOv5 model with anchor boxes, we need to evaluate its performance to determine how well it can detect objects in an image. There are several metrics we can use to evaluate the performance of the model, such as precision, recall, and F1 score.

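Precision measures the proportion of true positives (correctly detected objects) out of all the objects detected by the model. Recall measures the proportion of true positives out of all the ground truth objects in the images being evaluated. A detection is usually counted as a true positive when its class is correct and its overlap with a ground truth box, measured by intersection over union (IoU), exceeds a threshold such as 0.5. F1 score is the harmonic mean of precision and recall, and is a good metric to use when we want to balance precision and recall.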
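These three definitions translate directly into code. The sketch below assumes we have already counted the true positives, false positives, and false negatives. The function name precision_recall_f1 and the example counts are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from detection counts."""
    # Precision: correct detections out of everything the model detected.
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: correct detections out of all ground truth objects.
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Example: 8 correct detections, 2 spurious detections, 4 missed objects.
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=4)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

The guards against division by zero return 0.0 when there are no detections or no ground truth objects, which is one possible convention among several.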

To calculate these metrics, we need to compare the predicted bounding boxes output by the model to the ground truth bounding boxes in the annotated dataset. We can use tools such as the COCO API or the PASCAL VOC evaluation toolkit to calculate these metrics.
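Comparing a predicted box with a ground truth box is usually done with intersection over union (IoU). The sketch below assumes boxes given as (x_min, y_min, x_max, y_max) in pixels. The function name box_iou here is our own minimal version for illustration, not the implementation used by the COCO API or any other toolkit.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle, if any.
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


prediction = (0, 0, 10, 10)
ground_truth = (5, 5, 15, 15)
# The boxes share a 5x5 region out of a combined area of 175 pixels.
print(box_iou(prediction, ground_truth))
```

A prediction would then count as a true positive when this value exceeds the chosen threshold and the predicted class matches.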

In addition to these metrics, we can also visualize the predicted bounding boxes on the images to get a better sense of how well the model is performing. We can use tools such as OpenCV or Matplotlib to visualize the images with the predicted bounding boxes overlaid on top.

Overall, evaluating the performance of the YOLOv5 model with anchor boxes is an important step in determining how well it can detect objects in an image. By calculating metrics such as precision, recall, and F1 score, and visualizing the predicted bounding boxes, we can gain insights into how well the model is performing and identify areas for improvement.

Loading the necessary libraries

In this section, we will load the libraries needed to work with anchor boxes in YOLOv5: PyTorch, NumPy, and the Model class from the YOLOv5 codebase.

    import torch
    import numpy as np
    from yolov5.models.yolo import Model

The above code block imports the necessary libraries for implementing anchor boxes in YOLOv5. The torch library is used for creating and training deep learning models, while numpy is used for numerical operations. The Model class from yolov5.models.yolo is used to define the YOLOv5 model architecture. By importing these libraries, we can use them to define and train our YOLOv5 model with anchor boxes.

Defining the YOLOv5 model architecture with anchor boxes

In this section, we will discuss how to define the YOLOv5 model architecture with anchor boxes. We will explore how to modify the model architecture to incorporate anchor boxes and how to define the loss function for training the model.

    anchors = np.array([(10, 13), (16, 30), (33, 23), (30, 61), (62, 45), (59, 119), (116, 90), (156, 198), (373, 326)], np.float32)

In this code segment, we define the anchor boxes to be used in the YOLOv5 model. The anchors variable is a NumPy array that contains the width and height of each anchor box in pixels, ordered from smallest to largest. These nine values match the defaults in yolov5s.yaml. We can replace them with anchor boxes based on the shapes and sizes of objects in our own training dataset, which can improve the accuracy of the model when our objects differ markedly from those defaults.
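The nine anchors are not used all at once. The sketch below shows how they could be grouped by detection scale, assuming three scales with strides of 8, 16, and 32 pixels and three anchors per scale, which is the layout of the standard YOLOv5 models as we understand it. The variable names strides and per_scale are hypothetical.

```python
import numpy as np

anchors = np.array([(10, 13), (16, 30), (33, 23), (30, 61), (62, 45),
                    (59, 119), (116, 90), (156, 198), (373, 326)], np.float32)

# Assumption: three output scales with these downsampling factors.
strides = (8, 16, 32)

# Shape (3, 3, 2): scale, anchor within that scale, (width, height).
per_scale = anchors.reshape(3, 3, 2)

for stride, group in zip(strides, per_scale):
    # Dividing by the stride expresses each anchor in grid-cell units.
    in_cells = (group / stride).round(2)
    print(f"stride {stride}: pixels={group.tolist()} cells={in_cells.tolist()}")
```

Grouping the anchors this way pairs the smallest shapes with the finest grid and the largest shapes with the coarsest grid.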

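    # The anchors are read from the anchors section of the configuration file.
    # Adjust the path to where yolov5s.yaml lives on your system.
    # nc is the number of classes (80 is only an example value).
    model = Model(cfg="models/yolov5s.yaml", ch=3, nc=80)

Let’s dive into this code block and understand how it operates. Here, we create the YOLOv5 model by instantiating the Model class from the yolov5.models.yolo module. The constructor builds the network from a model configuration file, and the anchor boxes are taken from the anchors section of that file. To use the custom anchors variable defined above, we therefore write its values into the configuration file. In the versions of the codebase we are aware of, the constructor's own anchors argument sets the number of anchors per scale rather than accepting an array of shapes, so passing the array directly does not work.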

Preparing the dataset and annotations

Preparing the dataset and annotations is a crucial step in training the YOLOv5 model with anchor boxes. The dataset should contain images of the objects we want to detect, and the annotations should contain the bounding boxes that indicate the location of the objects in the images. We can use tools such as LabelImg to annotate the images and create the annotations in the required format.

The annotations should be in the YOLO format, which consists of a text file for each image in the dataset. Each text file should contain one row for each object in the image, with the first column indicating the class of the object and the remaining columns indicating the normalized coordinates of the bounding box. The normalized coordinates are the x and y coordinates of the center of the bounding box, followed by the width and height of the bounding box. The x coordinate and the width are divided by the image width, and the y coordinate and the height are divided by the image height, so all four values lie between 0 and 1.
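To check that a label file is correct, it helps to convert a row back into pixel coordinates. The sketch below assumes the row layout described above (class, x_center, y_center, width, height, separated by spaces). The function name yolo_to_pixels and the sample row are hypothetical.

```python
def yolo_to_pixels(line, img_w, img_h):
    """Parse one YOLO label row and return (class, x_min, y_min, x_max, y_max)."""
    parts = line.split()
    class_id = int(parts[0])
    xc, yc, w, h = (float(v) for v in parts[1:5])
    # Undo the normalization and move from center/size to corner coordinates.
    x_min = (xc - w / 2) * img_w
    y_min = (yc - h / 2) * img_h
    x_max = (xc + w / 2) * img_w
    y_max = (yc + h / 2) * img_h
    return class_id, x_min, y_min, x_max, y_max


# One object of class 0, centered in a 640x480 image.
print(yolo_to_pixels("0 0.5 0.5 0.25 0.5", img_w=640, img_h=480))
```

Drawing the resulting rectangle on the image is a quick way to spot annotations that were normalized by the wrong dimension.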

Once we have the annotated dataset, we can use the dataset loading code provided in the YOLOv5 codebase (utils/datasets.py, named utils/dataloaders.py in newer releases) to create a PyTorch dataset object that can be used for training the model. This code reads the annotations and images from the dataset directory and converts them into a format that can be used by the YOLOv5 model.

Overall, preparing the dataset and annotations is a critical step in training the YOLOv5 model with anchor boxes. By ensuring that the dataset and annotations are in the correct format, we can train the model to accurately detect objects in an image.

Training the model

In this section, we will discuss how to train the YOLOv5 model with anchor boxes on our annotated dataset. We will explore how to use the train.py script provided in the YOLOv5 codebase to train the model, and how to evaluate the performance of the trained model using metrics such as precision, recall, and F1 score.

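    from yolov5.utils.loss import ComputeLoss

    # Assumes `dataloader` yields (images, targets) batches in YOLOv5's format,
    # with images as float tensors, and that `model.hyp` holds the training
    # hyperparameters that ComputeLoss reads.
    optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.9)
    # YOLOv5's loss matches targets to anchors and combines box, objectness,
    # and class terms.
    compute_loss = ComputeLoss(model)
    model.train()
    for epoch in range(10):
        for images, targets in dataloader:
            optimizer.zero_grad()
            outputs = model(images)  # one raw prediction tensor per scale
            loss, loss_items = compute_loss(outputs, targets)
            loss.backward()
            optimizer.step()

This segment of code sketches how to train the YOLOv5 model with anchor boxes on our annotated dataset. We first define the optimizer to be used for training the model, which is the stochastic gradient descent (SGD) optimizer with a learning rate of 0.01 and momentum of 0.9. We then create the loss function. A plain binary cross-entropy loss cannot be applied directly to the model outputs, because in training mode the model returns one prediction tensor per scale and the targets must first be matched to anchors. We therefore use the ComputeLoss class from the codebase, which performs this matching and combines a box regression term with binary cross-entropy terms for objectness and class. We then loop through the dataset for a specified number of epochs, and for each epoch we loop through the batches of images and targets. We zero out the gradients, forward propagate the images through the model, calculate the loss, and backpropagate the loss to update the model parameters using the optimizer. In practice, the train.py script wraps this loop together with data loading, learning rate scheduling, and checkpointing.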

Evaluating the model

After training the YOLOv5 model with anchor boxes, we evaluate it as described in the earlier section on evaluating the model. We compare the predicted bounding boxes with the ground truth bounding boxes in the annotated dataset, compute precision, recall, and F1 score (for example with the COCO API or the PASCAL VOC evaluation toolkit), and visualize the predictions with OpenCV or Matplotlib to identify areas for improvement.

Recap of what we learned and future directions and improvements

In this tutorial, we learned how to implement anchor boxes in YOLOv5 for object detection. We discussed how to load the necessary libraries, define the YOLOv5 model architecture with anchor boxes, prepare the dataset and annotations, train the model, and evaluate its performance using metrics such as precision, recall, and F1 score.

Key takeaways from this tutorial include:

- Anchor boxes are predefined bounding boxes that are used to represent objects of different shapes and sizes in an image.

- By using anchor boxes in YOLOv5, we can improve the accuracy of our object detection model and reduce the number of false positives and false negatives.

- We can define the YOLOv5 model architecture with anchor boxes by creating the model with the Model constructor and configuring the anchor boxes to match our dataset.

- We can train the YOLOv5 model with anchor boxes using the train.py script provided in the YOLOv5 codebase and evaluate its performance using metrics such as precision, recall, and F1 score.

- We can visualize the predicted bounding boxes on the images to gain insights into how well the model is performing and identify areas for improvement.

As future directions and improvements, we can explore different anchor box configurations to improve the accuracy of the model, use transfer learning to fine-tune the model on a specific dataset, and use techniques such as data augmentation to increase the size and diversity of the training dataset.