In recent years, AI has taken off and become a topic that frequently makes the news. But why exactly is that?

AI research began in the mid-twentieth century, when mathematician Alan Turing asked the question “Can Machines Think?” in a famous paper in 1950. However, it was not until the 21st century that Artificial Intelligence began to shape real-world applications that impact billions of people and most industries across the globe.

In this article, we will explore the reasons behind the meteoric rise of AI. Essentially, it boils down to 6 key factors that together create an incredibly powerful environment, one that enables AI researchers and practitioners to build systems ranging from movie recommendations (Netflix) to self-driving cars (Waymo) to speech recognition (Alexa).

The following 6 factors are powering the AI Revolution:

- Big Data

- Processing Power

- Connected Globe

- Open-Source Software

- Improved Algorithms

- Accelerating Returns

Let’s dive into each of them to see why exactly they make a difference and how they drive the rapid progress in AI.

Big Data

First things first. Here’s a formal definition of Big Data from the research and advisory company Gartner:

“Big data is high-volume, high-velocity, and/or high-variety information assets that demand cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation.”

Put simply, computers, the internet, smartphones, and other devices have generated vast amounts of data and given us access to it. And that amount is expected to grow rapidly as trillions of sensors are deployed in appliances, packages, clothing, autonomous vehicles, and elsewhere.

Data is becoming so large and complex that it is difficult, if not impossible, to process using traditional methods. This is where Machine Learning and Deep Learning come into play: AI-assisted data processing allows us to discover historical patterns, make predictions more efficiently, give more effective recommendations, and much more.

As a side note, data can be broadly put into two buckets: (1) structured and (2) unstructured data. Structured data is organized and formatted so that it is easily searchable in relational databases. In contrast, unstructured data, such as videos or images, has no predefined format, which makes it much more difficult to collect, process, and analyze.

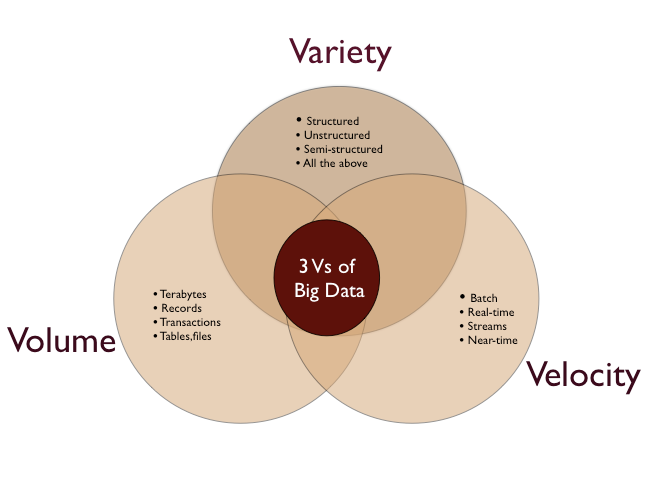
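To make the distinction between structured and unstructured data more concrete, here is a minimal Python sketch that uses only the standard library. The `orders` table, the review sentences, and all the numbers are made up for illustration. Pulling a total out of the structured table takes a single SQL query, while extracting comparable facts from free text means guessing at patterns.

```python
import re
import sqlite3

# Structured data (hypothetical): every record has the same predefined fields,
# so a relational database can filter and aggregate it with a simple query.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, product TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [("alice", "laptop", 1200.0), ("bob", "phone", 650.0), ("alice", "mouse", 25.0)],
)
alice_total = conn.execute(
    "SELECT SUM(amount) FROM orders WHERE customer = ?", ("alice",)
).fetchone()[0]
print(alice_total)  # 1225.0

# Unstructured data (hypothetical): similar facts buried in free text.
# There is no schema, so we have to guess a pattern just to find the numbers.
reviews = [
    "Alice said the laptop was worth every one of its 1200 dollars.",
    "Bob loves his new phone, although 650 bucks felt steep.",
]
amounts = [float(m) for text in reviews for m in re.findall(r"\d+", text)]
print(amounts)  # [1200.0, 650.0]
```

The regular expression is deliberately naive: it would miss a price written as “twelve hundred dollars” and would pick up any unrelated number in the text. That brittleness hints at why unstructured data needs so much more processing.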

One framework often used to describe Big Data is the Three Vs, which stands for Volume, Velocity, and Variety.

Volume

The amount of data matters. With big data, you’ll have to process high volumes of low-density, unstructured data. This can be data of unknown value, such as Twitter data feeds, clickstreams on a webpage or mobile app, or readings from sensor-enabled equipment. For some organizations, this might be tens of terabytes of data; for others, it may be hundreds of petabytes.

Velocity

Velocity is the fast rate at which data is received and (perhaps) acted on. The highest-velocity data normally streams directly into memory instead of being written to disk. Some internet-enabled smart products operate in real time or near real time and require real-time evaluation and action.
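As a rough illustration of handling high-velocity data in memory, the following Python sketch keeps only a small sliding window of recent values and reacts as each new reading arrives, without ever writing the stream to disk. The function name `rolling_alerts`, the window size, the threshold, and the sensor readings are all hypothetical; real streaming systems are far more elaborate.

```python
from __future__ import annotations

from collections import deque
from typing import Iterable, Iterator


def rolling_alerts(readings: Iterable[float], window: int = 3,
                   threshold: float = 50.0) -> Iterator[tuple[int, float]]:
    """Yield (index, rolling_mean) whenever the mean of the last `window`
    readings exceeds `threshold`. Only the last `window` values are held
    in memory; nothing is written to disk."""
    recent: deque[float] = deque(maxlen=window)  # oldest value drops off automatically
    for i, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window:
            mean = sum(recent) / window
            if mean > threshold:
                yield i, mean  # act on the data as soon as it arrives


# Hypothetical temperature readings arriving one at a time from a sensor.
sensor_stream = iter([40.0, 45.0, 48.0, 55.0, 60.0, 42.0, 30.0])
alerts = list(rolling_alerts(sensor_stream))
print(alerts)
```

Because `deque(maxlen=window)` discards the oldest value automatically, memory use stays constant no matter how long the stream runs.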

Variety

Variety refers to the many types of data that are available. Traditional data types were structured and fit neatly in a relational database. With the rise of big data, data increasingly comes in new, unstructured types. Unstructured and semi-structured data types, such as text, audio, and video, require additional preprocessing to derive meaning and support metadata.

Processing Power

Gordon Moore, the co-founder of Intel Corporation, is the author of Moore’s law, the observation that the number of transistors on a microchip doubles roughly every two years. This trend has driven steady increases in processor computing power over the last 50+ years. On top of that, new technologies have emerged that accelerate computation even further, specifically for AI applications.
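Moore’s law describes exponential growth, and a quick calculation shows how dramatic that is. This Python sketch assumes an idealized, perfectly regular doubling every two years, N(t) = N0 · 2^(t/T); real transistor counts only roughly follow this curve. The function name `transistors` is an illustrative choice.

```python
def transistors(n0: float, years: float, doubling_period: float = 2.0) -> float:
    """Idealized Moore's law: N(t) = N0 * 2 ** (t / T), where T is the
    doubling period in years (assumed to be exactly 2 here)."""
    return n0 * 2 ** (years / doubling_period)


# Growth factor relative to a starting count of 1 (hypothetical baseline).
print(transistors(1, 10))            # 32.0
print(f"{transistors(1, 50):,.0f}")  # 33,554,432
```

In other words, 50 years of doubling every two years amounts to 25 doublings, roughly a 33-million-fold increase.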

One of these technologies is the Graphics Processing Unit (GPU). A GPU can have hundreds or even thousands more cores than a Central Processing Unit (CPU). Its strength is performing many computations simultaneously, a capability that was originally used mainly for graphics-intensive computer games. But running many computations in parallel is exactly what Deep Learning requires to process large data sets and improve prediction quality.

Nvidia is the market leader in GPUs. Check out its website if you’re interested in learning more about its products and AI solutions.

The second technology is Cloud Computing. AI researchers and practitioners no longer have to rely on the computing power of their local machines; they can “outsource” the processing of their models to cloud services such as AWS, Google Cloud, Microsoft Azure, or IBM Watson.

Of course, these services aren’t free and can become fairly expensive, depending on the complexity of the Deep Learning model being trained. Nonetheless, individuals and companies with the budget now have access to massive processing power that simply wasn’t available in the past. This gives AI a huge boost.

Lastly, a completely new class of dedicated processors, designed from the ground up for AI applications, is starting to emerge.

One example is the Tensor Processing Unit (TPU) developed by Google. These processors were designed specifically for Google’s own TensorFlow software and significantly speed up neural network computations.

A large number of hardware startups are going after the big opportunity of building dedicated chips that accelerate AI. To name just a few: Graphcore, Cerebras, Hailo, Nuvia, and Groq. Check them out if you’re interested in these specialized processors; they could become a game-changer for AI.

Connected Globe

Thanks to the internet and smartphones, humans are more connected than ever. Of course, this hyper-connectivity comes with some downsides, but overall it has been a huge benefit to society and individuals. Information and knowledge, shared through social media platforms and online communities on all kinds of topics, are now available to almost everyone.

The Connected Globe also supports the rise of AI. Information about the latest research and applications spreads easily, and knowledge sharing is actively encouraged. There are also AI-related communities that develop open-source tools and share their work, so more people can benefit from everyone’s learnings and findings.

For example, Medium hosts many publications targeted at an AI audience, where a large number of individuals share helpful insights. One can also find amazing resources on Twitter posted by top AI researchers and practitioners who are making a big impact in the field. And of course there is GitHub, a massive community of software developers who share projects, code, and more.

In the end, everyone (even big tech companies like Google and Facebook) knows that AI is too complex to be solved by individuals or single companies. That insight encourages sharing and collaboration, which interconnected communities make more effective than ever before.

Open-Source Software

Let’s start with a definition: “Open source software is software with source code that anyone can inspect, modify, and enhance.” Linux, for example, is probably the best-known and most widely used open source operating system, and it has had a huge influence on the acceptance and use of open source software.

Open-source software and data are accelerating the development of AI tremendously. In particular, open-source Machine Learning standards and libraries such as scikit-learn and TensorFlow support the execution of complex tasks and let practitioners focus on conceptual problem solving instead of technical implementation. Overall, an open-source approach means less time spent on routine coding, increased industry standardization, and wider application of emerging AI tools.

Check out this article on Analytics Vidhya for an overview of 21 different open source Machine Learning tools and frameworks. The article groups them into the following 5 categories:

- Open Source Machine Learning Tools for Non-Programmers

- Machine Learning Model Deployment

- Big Data Open Source Tools

- Computer Vision, NLP, and Audio

- Reinforcement Learning

Improved Algorithms

AI researchers have made significant advances in algorithms and techniques that contribute to better predictions and completely new applications.

Deep Learning (DL) in particular, which uses neural networks with many layers, loosely inspired by the way the human brain processes information, has seen massive breakthroughs in the last few years. For example, modern Natural Language Processing (NLP) and Computer Vision (CV) are largely powered by DL and are used in many real-world applications, from search engines to autonomous vehicles.
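The “layers” in Deep Learning are less mysterious than they sound. The following minimal Python sketch runs a single forward pass through a tiny two-layer network: each layer computes weighted sums of its inputs, adds a bias, and applies a nonlinear activation. The weights are hand-picked, hypothetical values; in practice they are learned from data (typically with backpropagation and gradient descent), and real networks have far more layers and parameters.

```python
import math


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each output neuron takes a weighted sum of
    all inputs, adds its bias, and applies a nonlinear activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical, untrained weights: 2 inputs -> 3 hidden neurons -> 1 output.
hidden_w = [[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]]
hidden_b = [0.0, 0.1, 0.2]
output_w = [[1.0, -0.5, 0.25]]
output_b = [0.0]

x = [1.0, 2.0]
hidden = dense_layer(x, hidden_w, hidden_b, relu)          # first layer
output = dense_layer(hidden, output_w, output_b, sigmoid)  # second layer
print(hidden, output)
```

Stacking more calls to `dense_layer` is what makes a network “deep”. Frameworks such as TensorFlow perform the same arithmetic as large matrix operations, which is exactly the kind of parallel work that GPUs and TPUs accelerate.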

Another emerging area of research is Reinforcement Learning (RL), in which an AI agent learns with little or no initial input data, through trial and error guided by a reward function. Google’s subsidiary DeepMind, one of the leading AI research companies, has so far demonstrated the potential of RL mainly in games. Its famous AlphaGo program beat top Go player Lee Sedol in a match in 2016, something many people didn’t expect to happen so soon, given the complexity of the game.
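The core RL loop (act, receive a reward, update) can be sketched with one of its simplest settings, a multi-armed bandit, in which an agent repeatedly picks one of several actions and learns from rewards alone which one pays off best. This epsilon-greedy Python sketch is purely illustrative: the win rates are hypothetical, and systems like AlphaGo are vastly more elaborate, combining RL with deep neural networks and tree search.

```python
import random


def epsilon_greedy_bandit(true_win_rates, steps=5000, epsilon=0.1, seed=0):
    """Learn which action pays off best purely from reward feedback.

    The agent starts with no data. At each step it either explores (picks a
    random action with probability `epsilon`) or exploits (picks the action
    with the highest estimated value), receives a reward of 1 or 0, and
    updates its estimate for that action.
    """
    rng = random.Random(seed)      # seeded for reproducible runs
    n_actions = len(true_win_rates)
    estimates = [0.0] * n_actions  # estimated value of each action
    counts = [0] * n_actions       # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)                           # explore
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        reward = 1.0 if rng.random() < true_win_rates[action] else 0.0
        counts[action] += 1
        # Incremental average: nudge the estimate toward the observed reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


# Hypothetical environment: three slot machines whose win rates the agent never sees.
estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
best = max(range(len(counts)), key=lambda a: counts[a])
print(best, [round(e, 2) for e in estimates], counts)
```

The `epsilon` parameter controls the trade-off between exploring actions that might turn out better and exploiting the best action found so far, a tension at the heart of trial-and-error learning.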

Many other AI research labs, such as OpenAI and Facebook AI, as well as universities, keep pushing the envelope and constantly make new discoveries that trickle down to real industry applications over time.

Accelerating Returns

Artificial Intelligence, Machine Learning, and Deep Learning are no longer mere theories and concepts; they boost companies’ competitive advantage and increase their productivity. As a result, competitive pressure pushes companies to use AI systems in their products and services.

For example, many consumer companies now leverage AI for personalization and recommendation; think of Netflix or Amazon. And pharmaceutical companies such as Pfizer are using AI in an effort to shorten the drug development process from about a decade to just a few years.

These returns encourage companies to invest even more resources in R&D and in new applications that take advantage of Artificial Intelligence and make it better: a self-reinforcing cycle.
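To give a flavor of how AI-driven recommendation can work, here is a minimal sketch of user-based collaborative filtering in Python: movies a user hasn’t seen are scored by other users’ ratings, weighted by how similar their tastes are (measured with cosine similarity). The users, movies, ratings, and function names are hypothetical, and production recommenders such as those at Netflix or Amazon are far more sophisticated.

```python
import math

# Hypothetical ratings (1-5) that users gave to movies; unrated = not watched.
ratings = {
    "ana":   {"Inception": 5, "Interstellar": 4, "Titanic": 1},
    "ben":   {"Inception": 4, "Interstellar": 5, "The Matrix": 5},
    "chloe": {"Titanic": 5, "The Notebook": 4, "Inception": 1},
}


def cosine_similarity(a, b):
    """Cosine similarity between two users' rating vectors
    (a movie a user has not rated counts as 0)."""
    movies = set(a) | set(b)
    dot = sum(a.get(m, 0) * b.get(m, 0) for m in movies)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def recommend(user, ratings, k=1):
    """Return the top-k unseen movies for `user`, scoring each movie by other
    users' ratings weighted by their similarity to `user`."""
    scores = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], their_ratings)
        for movie, rating in their_ratings.items():
            if movie not in ratings[user]:
                scores[movie] = scores.get(movie, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:k]


print(recommend("ana", ratings))  # ['The Matrix']
```

Ben’s tastes overlap heavily with Ana’s, so his favorite, The Matrix, comes out on top for her.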

Conclusion

We identified six factors that contribute to the AI revolution: Big Data, Processing Power, Connected Globe, Open-Source Software, Improved Algorithms, and Accelerating Returns.

These factors are converging, and continued development and innovation will keep accelerating the impact of AI.