I. Introduction

Bidirectional Encoder Representations from Transformers, or BERT, is a self-supervised machine learning model that uses the Transformer architecture and bidirectional training. When it was released in 2018, it achieved state-of-the-art results on a wide array of Natural Language Processing (NLP) tasks. One of these tasks, text classification, shows up in real-world applications such as spam filtering, sentiment analysis, and tagging customer queries. In this tutorial, we’ll focus on building a text classification model using BERT.

II. Understanding BERT

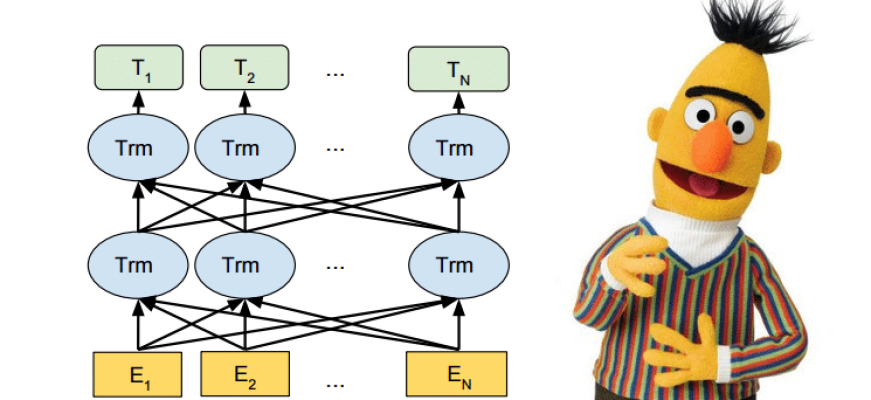

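BERT is a Transformer-based machine learning technique for NLP pre-training, developed by Google. It builds the representation of every word in a sentence from the words that come both before and after it; this is the essence of bidirectional training.

Earlier language models typically read text in only one direction (left to right), or, as in ELMo, combined two separately trained one-directional models. BERT instead uses the Transformer encoder, whose self-attention lets every token attend to every other token in the sequence at once. Because a model that sees the whole sentence could trivially "predict" a word it can already see, BERT is pre-trained with masked language modeling: some input tokens are hidden, and the model learns to recover them from the context on both sides. This full-context view is a key reason BERT outperformed previous models on many benchmarks.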
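To make "looking at the words before and after" concrete, here is a toy, plain-Python sketch of scaled dot-product self-attention, the operation Transformer layers use to mix context into each token. It is a simplification under stated assumptions: one attention head, tiny hand-picked vectors, and no learned query/key/value projections. It is not BERT's actual implementation, and the helper names are hypothetical. In this sketch, the only difference between a left-to-right model and a bidirectional encoder is the mask.

```python
import math
from typing import List

Matrix = List[List[float]]


def visibility_mask(n: int, bidirectional: bool) -> List[List[bool]]:
    """mask[i][j] is True when token i may attend to token j (hypothetical helper)."""
    # A left-to-right model only sees positions j <= i; a bidirectional encoder sees all.
    return [[bidirectional or j <= i for j in range(n)] for i in range(n)]


def self_attention(q: Matrix, k: Matrix, v: Matrix, mask: List[List[bool]]) -> Matrix:
    """Single-head scaled dot-product attention: softmax(q k^T / sqrt(d)) v."""
    d = len(q[0])
    outputs = []
    for i, q_i in enumerate(q):
        # Similarity of token i to every token j; hidden positions get -inf.
        scores = [
            sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d) if mask[i][j] else -math.inf
            for j, k_j in enumerate(k)
        ]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]  # exp(-inf) is 0.0
        total = sum(weights)
        weights = [w / total for w in weights]
        # Each output vector is a weighted average of the value vectors.
        outputs.append([sum(w * v_j[c] for w, v_j in zip(weights, v)) for c in range(len(v[0]))])
    return outputs


# Three toy 2-d token vectors, used as queries, keys, and values alike.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
causal_out = self_attention(x, x, x, visibility_mask(3, bidirectional=False))
bidir_out = self_attention(x, x, x, visibility_mask(3, bidirectional=True))
print(causal_out[0])  # [1.0, 0.0]: the first token can only see itself
print(bidir_out[0])   # the first token also mixes in the tokens to its right
```

With the causal mask, the first token's output is just its own value vector. With the bidirectional mask, it already carries information from later tokens, which is the property BERT relies on.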

III. Setting up the Environment

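First, we need to set up our environment. We will be using PyTorch, a popular deep learning library, and Transformers, a library by Hugging Face that provides pre-trained NLP models, along with scikit-learn and Hugging Face Datasets for data handling and evaluation. If you haven’t installed them yet, you can do so using pip (the leading `!` runs the command from a Jupyter or Colab notebook cell; omit it in a terminal):

```
!pip install torch transformers datasets scikit-learn
```

IV. Preprocessing the Text Data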

BERT requires specific formatting of our text data. First, each sentence is split into tokens by BERT's WordPiece tokenizer, which keeps common words whole and breaks rarer words into subword pieces. Next, each token is mapped to its ID in BERT's fixed vocabulary (30,522 entries for bert-base-uncased).
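The subword step can be illustrated with the greedy longest-match-first idea behind WordPiece: repeatedly take the longest vocabulary entry that matches the start of the remaining characters, marking word-internal pieces with a `##` prefix. The sketch below is an illustration under stated assumptions. It uses a tiny made-up vocabulary and IDs, and it skips the lowercasing and punctuation splitting the real tokenizer performs first. `wordpiece` is a hypothetical helper, not part of the `transformers` API.

```python
from typing import Dict, List

# Toy vocabulary: token -> ID. Entries and IDs are made up for illustration.
VOCAB: Dict[str, int] = {
    "[UNK]": 0, "token": 1, "##izer": 2, "un": 3, "##aff": 4, "##able": 5,
}


def wordpiece(word: str, vocab: Dict[str, int], unk: str = "[UNK]") -> List[str]:
    """Greedy longest-match-first split of one word into subword pieces."""
    pieces: List[str] = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a word-internal piece
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk]  # no piece matches: the whole word becomes [UNK]
        pieces.append(match)
        start = end
    return pieces


pieces = wordpiece("unaffable", VOCAB)
ids = [VOCAB[p] for p in pieces]  # the vocabulary lookup step
print(pieces, ids)  # ['un', '##aff', '##able'] [3, 4, 5]
```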

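Additionally, BERT requires special tokens: [CLS] at the beginning of our text and [SEP] at the end. Each token also needs a segment ID (called `token_type_ids` in Transformers), which is all 0s for single-sentence inputs. Because every sequence in a batch must have the same length, shorter inputs are padded with [PAD] tokens and longer ones are truncated (BERT accepts at most 512 tokens). Lastly, an attention mask marks relevant tokens with 1 and padding tokens with 0.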
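The formatting steps just described can be sketched in plain Python. The sketch turns a list of already-tokenized pieces into the three parallel inputs BERT expects: input IDs wrapped in [CLS] and [SEP] and padded to a fixed length, segment IDs, and the attention mask. The vocabulary and its IDs are made up, and `format_for_bert` is a hypothetical helper. In practice, the `transformers` tokenizer does all of this for you.

```python
from typing import Dict, List, Tuple

# Toy vocabulary with made-up IDs.
VOCAB: Dict[str, int] = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3, "hello": 4, "bert": 5}


def format_for_bert(tokens: List[str], vocab: Dict[str, int],
                    max_len: int) -> Tuple[List[int], List[int], List[int]]:
    """Return (input_ids, token_type_ids, attention_mask) for a single sentence."""
    # Truncate so that [CLS] and [SEP] still fit within max_len.
    tokens = ["[CLS]"] + tokens[: max_len - 2] + ["[SEP]"]
    input_ids = [vocab.get(t, vocab["[UNK]"]) for t in tokens]
    attention_mask = [1] * len(input_ids)  # 1 = real token
    padding = max_len - len(input_ids)
    input_ids += [vocab["[PAD]"]] * padding
    attention_mask += [0] * padding        # 0 = padding, ignored by attention
    token_type_ids = [0] * max_len         # one sentence: every token is segment 0
    return input_ids, token_type_ids, attention_mask


ids, types, mask = format_for_bert(["hello", "bert", "tutorial"], VOCAB, max_len=8)
print(ids)    # [2, 4, 5, 1, 3, 0, 0, 0]  ("tutorial" is not in the toy vocab, so [UNK])
print(types)  # [0, 0, 0, 0, 0, 0, 0, 0]
print(mask)   # [1, 1, 1, 1, 1, 0, 0, 0]
```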

Here’s how to carry out these steps with transformers:

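```python
from transformers import BertTokenizer

# Load the BERT WordPiece tokenizer that matches the pre-trained checkpoint
tokenizer = BertTokenizer.from_pretrained('bert-base-uncased', do_lower_case=True)

# Calling the tokenizer adds [CLS]/[SEP], maps tokens to IDs, and builds
# the segment IDs (token_type_ids) and the attention mask in one step.
encoding = tokenizer(
    'Hello, this is a BERT tutorial!',
    padding='max_length',  # pad with [PAD] up to max_length
    truncation=True,       # cut longer inputs down to max_length
    max_length=16,
)

print('Tokens:', tokenizer.convert_ids_to_tokens(encoding['input_ids']))
print('Input IDs:', encoding['input_ids'])
print('Token type IDs:', encoding['token_type_ids'])
print('Attention mask:', encoding['attention_mask'])
```

V. Loading and Understanding the BERT Model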

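Now that we know how to format our text data, let’s load the pre-trained BERT model. We’ll use the ‘bert-base-uncased’ checkpoint. “Base” is the smaller of the two model sizes released with the original paper: BERT-Base has 12 layers, a hidden size of 768, and about 110M parameters, while BERT-Large has 24 layers, a hidden size of 1024, and about 340M parameters. “Uncased” means the text is lowercased (and accent markers are stripped) before tokenization, so casing is ignored.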
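As a sanity check on those sizes, the parameter count can be worked out from the architecture. The sketch below assumes the standard BERT configuration: a 30,522-token vocabulary, 512 positions, 2 segment types, and a feed-forward width of 4 × the hidden size. It counts the embeddings, each encoder layer's attention and feed-forward weights with their layer norms, and the pooler. `bert_param_count` is a hypothetical helper.

```python
def bert_param_count(hidden: int, layers: int, vocab: int = 30522,
                     max_positions: int = 512, type_vocab: int = 2) -> int:
    """Count the weights and biases of a BERT encoder (no task-specific head)."""
    intermediate = 4 * hidden   # feed-forward width: 3072 for Base, 4096 for Large
    layer_norm = 2 * hidden     # one scale and one bias per hidden unit
    # Token, position, and segment embeddings, plus their layer norm.
    embeddings = (vocab + max_positions + type_vocab) * hidden + layer_norm
    # Q, K, V, and output projections (weights + biases), plus a layer norm.
    attention = 4 * (hidden * hidden + hidden) + layer_norm
    # Two feed-forward projections (hidden -> intermediate -> hidden), plus a layer norm.
    feed_forward = ((hidden * intermediate + intermediate)
                    + (intermediate * hidden + hidden) + layer_norm)
    # Pooler: a dense layer applied to the final [CLS] vector.
    pooler = hidden * hidden + hidden
    return embeddings + layers * (attention + feed_forward) + pooler


base = bert_param_count(hidden=768, layers=12)
large = bert_param_count(hidden=1024, layers=24)
print(f"{base:,} {large:,}")  # 109,482,240 335,141,888
```

That is about 109.5M and 335M parameters, in line with the rounded 110M and 340M figures quoted in the BERT paper. A two-label classification head on top of BERT-Base adds only 768 × 2 + 2 = 1,538 more.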

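```python
import torch
from transformers import BertModel

# Load the pre-trained encoder (no task-specific head yet)
model = BertModel.from_pretrained('bert-base-uncased')

# Put the model in evaluation mode (disables dropout)
model.eval()

# Run the sample sentence through the encoder to inspect its output
inputs = tokenizer('Hello, this is a BERT tutorial!', return_tensors='pt')
with torch.no_grad():
    outputs = model(**inputs)
print(outputs.last_hidden_state.shape)  # (batch size, number of tokens, 768)
```

VI. Preparing the Dataset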

For this tutorial, we’ll use the IMDB movie reviews dataset for sentiment analysis. It contains 25,000 movie reviews for training and 25,000 for testing.

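After loading the data, we will encode our labels (positive: 1, negative: 0) and split the reviews into training (70%), validation (15%), and test (15%) sets. The split is stratified by label so that each set keeps the same balance of positive and negative reviews.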

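```python
from datasets import load_dataset
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import LabelEncoder

# Load IMDB from the Hugging Face Hub and pool its train and test halves
imdb = load_dataset('imdb')
texts = list(imdb['train']['text']) + list(imdb['test']['text'])
labels = list(imdb['train']['label']) + list(imdb['test']['label'])

# Encode labels as integers. LabelEncoder sorts class names, so string labels
# 'negative'/'positive' become 0/1; the Hub copy is already 0/1 and stays as is.
encoder = LabelEncoder()
labels = encoder.fit_transform(labels)

# Split off 70% for training, then divide the remaining 30% evenly into
# validation and test sets (15% each). random_state makes the split reproducible.
train_texts, temp_texts, train_labels, temp_labels = train_test_split(
    texts, labels, stratify=labels, test_size=0.3, random_state=42)
val_texts, test_texts, val_labels, test_labels = train_test_split(
    temp_texts, temp_labels, stratify=temp_labels, test_size=0.5, random_state=42)
```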
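The `stratify` argument keeps the positive/negative ratio the same in every split. To show what that means, here is a simplified plain-Python sketch that shuffles each class separately and cuts it at the same fraction, applied twice to get a 70/15/15 split. It is an illustration under that assumption, not scikit-learn's actual algorithm, and `stratified_split` is a hypothetical name.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def stratified_split(labels: Sequence[int], test_fraction: float,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Return (train_indices, test_indices), cutting every class at the same fraction."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    train_idx: List[int] = []
    test_idx: List[int] = []
    for indices in by_class.values():
        rng.shuffle(indices)                          # shuffle within the class
        n_test = round(len(indices) * test_fraction)  # same share from each class
        test_idx += indices[:n_test]
        train_idx += indices[n_test:]
    return sorted(train_idx), sorted(test_idx)


# Toy labels: 100 negative (0) and 100 positive (1) reviews.
labels = [0] * 100 + [1] * 100

# 70% train, then split the remaining 30% in half for validation and test.
train_idx, temp_idx = stratified_split(labels, test_fraction=0.3)
temp_labels = [labels[i] for i in temp_idx]
val_pos, test_pos = stratified_split(temp_labels, test_fraction=0.5, seed=1)
val_idx = [temp_idx[p] for p in val_pos]
test_idx = [temp_idx[p] for p in test_pos]

print(len(train_idx), len(val_idx), len(test_idx))  # 140 30 30
print(sum(labels[i] for i in test_idx))             # 15 positives out of 30
```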

VII. Fine-tuning BERT for Text Classification

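Now, let's fine-tune our BERT model. We do this by adding a new classification layer, known as the classifier head, on top of the pre-trained model; `BertForSequenceClassification` feeds BERT's pooled [CLS] representation into this layer. The head is newly initialized, so it learns our specific task (sentiment analysis) from scratch. Meanwhile, the pre-trained BERT weights are updated as well, with a small learning rate, so the whole network is fine-tuned end to end.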
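Conceptually, the new head is just a linear layer that maps the pooled [CLS] vector to one score (logit) per class. During fine-tuning, a softmax cross-entropy loss on those logits is back-propagated through the head and the whole encoder. The toy sketch below shows that computation in plain Python. It assumes a 3-dimensional [CLS] vector and hand-picked weights instead of BERT-Base's 768 dimensions and learned values, and it omits the dropout the real model applies before the head. The helper names are hypothetical.

```python
import math
from typing import List


def linear(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """One output per class: logit_c = weights[c] . x + bias[c]."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits: List[float]) -> List[float]:
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits: List[float], label: int) -> float:
    """Negative log-probability assigned to the true class."""
    return -math.log(softmax(logits)[label])


cls_vector = [0.5, -1.0, 2.0]                 # toy pooled [CLS] representation
weights = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # row 0 = negative, row 1 = positive
bias = [0.0, 0.0]

logits = linear(cls_vector, weights, bias)
probs = softmax(logits)
print(logits)  # [0.5, 2.0]
print(probs, cross_entropy(logits, label=1))
```

The loss is small when the true class already gets the highest probability and grows as that probability shrinks, which is what drives the head and the encoder weights during training.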

```python
from transformers import BertForSequenceClassification

# Load BertForSequenceClassification: the pre-trained BERT model with a single,
# newly initialized linear classification layer on top. Expect a warning that
# the classifier weights are newly initialized; that is the new head.
model = BertForSequenceClassification.from_pretrained(
    "bert-base-uncased",         # The 12-layer BERT model with an uncased vocabulary.
    num_labels=2,                # Number of output labels: 2 for binary classification.
    output_attentions=False,     # Don't return attention weights.
    output_hidden_states=False,  # Don't return all hidden states.
)
```

Next, we’ll set up our optimizer and learning rate, then begin the training process.
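The "W" in AdamW stands for decoupled weight decay. Plain Adam with L2 regularization adds the decay term to the gradient. AdamW instead shrinks the weights directly, separately from the adaptive gradient step. The plain-Python sketch below performs one such update on a list of scalar parameters, following the update rule documented for PyTorch's `torch.optim.AdamW`. It is an illustration, not PyTorch's implementation, and `adamw_step` is a hypothetical name.

```python
import math
from typing import List, Tuple


def adamw_step(params: List[float], grads: List[float], m: List[float], v: List[float],
               t: int, lr: float = 2e-5, betas: Tuple[float, float] = (0.9, 0.999),
               eps: float = 1e-8, weight_decay: float = 0.01) -> None:
    """Apply one AdamW update in place; t is the 1-based step number."""
    b1, b2 = betas
    for i, g in enumerate(grads):
        params[i] -= lr * weight_decay * params[i]  # decoupled weight decay
        m[i] = b1 * m[i] + (1 - b1) * g             # running mean of gradients
        v[i] = b2 * v[i] + (1 - b2) * g * g         # running mean of squared gradients
        m_hat = m[i] / (1 - b1 ** t)                # bias corrections for early steps
        v_hat = v[i] / (1 - b2 ** t)
        params[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)


params, m, v = [1.0, -2.0], [0.0, 0.0], [0.0, 0.0]
adamw_step(params, grads=[0.5, -3.0], m=m, v=v, t=1, lr=0.1)
print(params)  # roughly [0.899, -1.898]
```

On the first step, the bias-corrected ratio `m_hat / sqrt(v_hat)` is about ±1. Each parameter therefore moves by roughly `lr` against its gradient, whatever the gradient's size, plus the small weight-decay shrink.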

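```python
import torch
from torch.optim import AdamW
from torch.utils.data import DataLoader, RandomSampler, TensorDataset

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model.to(device)

# AdamW is Adam with decoupled weight decay (the "W"). transformers.AdamW is deprecated,
# so use PyTorch's; lr=2e-5 is one of the values the BERT paper suggests, and
# weight_decay=0.0 matches transformers.AdamW's default (PyTorch's default is 0.01).
optimizer = AdamW(model.parameters(), lr=2e-5, eps=1e-8, weight_decay=0.0)

# Tokenize the training set. max_length=256 is an assumed value that truncates
# long reviews to save memory (BERT accepts at most 512 tokens).
enc = tokenizer(list(train_texts), padding='max_length', truncation=True,
                max_length=256, return_tensors='pt')
train_dataset = TensorDataset(enc['input_ids'], enc['attention_mask'],
                              torch.tensor(train_labels))
train_dataloader = DataLoader(train_dataset, sampler=RandomSampler(train_dataset),
                              batch_size=32)

# Minimal training loop; 2 epochs is an assumed value (the paper suggests 2 to 4).
model.train()
for epoch in range(2):
    for input_ids, attention_mask, batch_labels in train_dataloader:
        optimizer.zero_grad()
        # Passing labels makes the model return a cross-entropy loss
        outputs = model(input_ids=input_ids.to(device),
                        attention_mask=attention_mask.to(device),
                        labels=batch_labels.to(device))
        outputs.loss.backward()
        torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)  # guard against exploding gradients
        optimizer.step()
```

VIII. Evaluating the Model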

To see how well our model is doing, we can compute its accuracy on the validation set, evaluating in batches of 32.
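Accuracy is the fraction of examples whose highest-scoring class (the argmax of the logits) matches the label. One subtlety: averaging per-batch accuracies weights a small final batch as heavily as a full one, so the result can differ from the accuracy over all examples. The plain-Python sketch below contrasts the two. `flat_accuracy` and `argmax` are hypothetical helpers written for illustration, and the batches are made-up numbers.

```python
from typing import Sequence


def argmax(row: Sequence[float]) -> int:
    return max(range(len(row)), key=lambda j: row[j])


def flat_accuracy(logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Fraction of rows whose highest logit is at the true label's index."""
    correct = sum(argmax(row) == y for row, y in zip(logits, labels))
    return correct / len(labels)


# Two made-up batches: a full batch of 4 and a smaller final batch of 2.
batches = [
    ([[0.1, 0.9], [2.0, -1.0], [0.3, 0.2], [-0.5, 0.5]], [1, 0, 0, 1]),  # 4/4 correct
    ([[1.0, 0.0], [0.2, 0.1]], [1, 1]),                                   # 0/2 correct
]
mean_of_batches = sum(flat_accuracy(l, y) for l, y in batches) / len(batches)
overall = (sum(flat_accuracy(l, y) * len(y) for l, y in batches)
           / sum(len(y) for _, y in batches))
print(mean_of_batches, overall)  # 0.5 0.6666666666666666
```

Pooling all predictions before scoring gives the true accuracy over the validation set.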

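```python
import torch
from sklearn.metrics import accuracy_score
from torch.utils.data import DataLoader, SequentialSampler, TensorDataset

# Tokenize the validation texts the same way as the training texts
# (max_length=256 is an assumed value; use the one you trained with).
val_enc = tokenizer(list(val_texts), padding='max_length', truncation=True,
                    max_length=256, return_tensors='pt')
val_dataset = TensorDataset(val_enc['input_ids'], val_enc['attention_mask'],
                            torch.tensor(val_labels))

# Create the DataLoader for our validation set.
val_dataloader = DataLoader(
    val_dataset,                             # The validation samples.
    sampler=SequentialSampler(val_dataset),  # Pull out batches sequentially.
    batch_size=32,                           # Evaluate with this batch size.
)

# Put model in evaluation mode (disables dropout)
model.eval()
device = next(model.parameters()).device

all_preds, all_labels = [], []
for input_ids, attention_mask, batch_labels in val_dataloader:
    with torch.no_grad():
        outputs = model(input_ids=input_ids.to(device),
                        attention_mask=attention_mask.to(device))
    # The predicted class is the index of the largest logit
    preds = outputs.logits.argmax(dim=-1).cpu().numpy()
    all_preds.extend(preds)
    all_labels.extend(batch_labels.numpy())

# Score all predictions at once rather than averaging per-batch accuracies
print("Validation Accuracy: {:.4f}".format(accuracy_score(all_labels, all_preds)))
```

IX. Testing and Making Predictions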

Now we can use our trained model to make predictions on new text. Let’s predict the sentiment of the following sentence: “This tutorial is really helpful!”

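```python
import torch

# Convert our text into the BERT input format (IDs, segment IDs, attention mask)
inputs = tokenizer("This tutorial is really helpful!", return_tensors='pt')
device = next(model.parameters()).device
inputs = {name: tensor.to(device) for name, tensor in inputs.items()}

# Get the model's predictions; the logits live in the .logits attribute
model.eval()
with torch.no_grad():
    logits = model(**inputs).logits

# Get the predicted class (0 = negative, 1 = positive)
predicted_class = torch.argmax(logits, dim=-1).item()

print("Predicted class:", predicted_class)
```

X. Conclusion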

That’s it! You’ve just fine-tuned a BERT model for text classification. In this tutorial, we’ve covered how to preprocess text data, load a pre-trained BERT model, fine-tune it on a text classification task, and make predictions on new, unseen data.

Remember that BERT is a powerful tool that can greatly enhance the performance of various NLP tasks. However, it’s not a silver bullet. Depending on the specifics of your task, there might be other models or approaches that are more appropriate. Don’t hesitate to explore other transformer-based models like XLM, GPT-2, RoBERTa, or DistilBERT.

XI. References and Additional Resources

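- Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova, “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” (NAACL 2019)

- Hugging Face, Transformers library documentation

- Chris McCormick and Nick Ryan, “BERT Fine-Tuning Tutorial with PyTorch”

- Jay Alammar, “The Illustrated BERT, ELMo, and co.”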

Feel free to dive into these resources to learn more about BERT and other transformer models, and explore the immense capabilities they offer in the field of NLP. Happy modeling!